Most organizations are gradually moving their data-related projects to the cloud. Why? Because of benefits such as easy scaling, flexibility, cost-effective offerings, and secure backup and recovery. This migration is also a great opportunity to unify your siloed data. But which tool should you choose to move your data to the cloud?

Microsoft’s answer to this is Azure Data Factory (ADF). It’s one of the best tools available on the market to move, integrate, and prepare your data in the cloud.

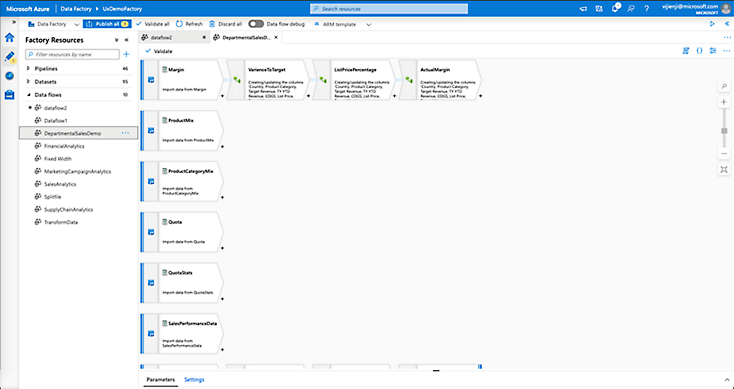

Image Source: Microsoft

Let’s look at 10 benefits of the feature-rich ADF that come in handy when shifting your data stores to the cloud.

1. Hybrid Data Integration at Scale

Organizations collect huge volumes of data every day. When data comes from sources as disparate as multi-cloud environments, SaaS apps, and on-premises systems, collecting, ingesting, and processing it can be a huge challenge. Not only can this cause integration and quality issues, but it also costs time and effort.

Microsoft ADF offers just the right solution to handle such issues. ADF provides a unified data integration experience that enables you to integrate data from a variety of sources, including on-premises data warehouses, cloud data warehouses, and SaaS applications.

You can use Data Factory to create data pipelines that move data between different sources, transform it, and load it into a variety of destinations. Supported transfer paths include:

- on-premises to the cloud,

- cloud to cloud,

- cloud to on-premises, and

- even on-premises to on-premises data transfers.

This flexibility ensures that regardless of your infrastructure setup, ADF enables smooth data flows and integration across your entire ecosystem.
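To make the idea of a data pipeline concrete, here is a minimal sketch that builds a pipeline definition with a single Copy activity as a Python dictionary and prints it as JSON. The overall shape follows the JSON format ADF uses for pipelines, but the pipeline name, dataset names, and the chosen source and sink types are assumptions made for illustration. Check them against the connector you actually use.

```python
import json


def copy_pipeline(name, source_dataset, sink_dataset, source_type, sink_type):
    """Build a pipeline definition that contains a single Copy activity."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": f"Copy_{source_dataset}_to_{sink_dataset}",
                    "type": "Copy",
                    # Datasets are referenced by name; they are defined separately.
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    "typeProperties": {
                        "source": {"type": source_type},
                        "sink": {"type": sink_type},
                    },
                }
            ]
        },
    }


# Hypothetical example: an on-premises SQL Server table copied to cloud storage.
pipeline = copy_pipeline(
    name="OnPremToCloudPipeline",
    source_dataset="OnPremSqlOrders",
    sink_dataset="BlobOrders",
    source_type="SqlServerSource",
    sink_type="DelimitedTextSink",
)
print(json.dumps(pipeline, indent=2))
```

The same helper covers the other transfer paths by swapping the dataset names and the source and sink types.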

ADF is a serverless, cloud-based solution that offers excellent security and flexibility at scale.

- Easy to use: Its architecture has drag-and-drop features that cover ingestion, preparation, transformation, and serving the data for analytics with no-code/low-code features.

- Scalability: Azure Data Factory is scalable, so you can easily increase the capacity of your data pipelines as your data needs grow.

- Reliability: Azure Data Factory is reliable, so you can be confident that your data pipelines will run successfully.

- Cost-effective: The pay-as-you-go model means you would be spending only on your consumed resources.

- Powerful: Pipelines orchestrate and monitor end-to-end jobs. ADF connects to a wide range of enterprise databases and serves your ETL/ELT needs with a robust solution.

- Intelligent: ADF offers intelligent and autonomous ETL, and helps in data democratization by empowering citizen data professionals.

2. Extensive Connector Library

In the age of social media and voluminous data, pulling and merging data from various sources can be complex, time-consuming, and dependent on specialist expertise. The behind-the-scenes job of connecting to data stores can become a nightmare when it involves multiple tools, plug-ins, utilities, and vendors.

Azure Data Factory offers an extensive collection of more than 90 connectors, providing a vast range of options to connect to different data sources. ADF ensures seamless integration, enabling you to leverage data from various platforms and systems.

Image Source: Microsoft Azure

- Connects with big data sources like HDFS, Google BigQuery, etc.

- Connects with enterprise-level data warehouses like Teradata and Oracle Exadata.

- Connects with SaaS apps like ServiceNow and Salesforce.

- Supports various file formats like JSON, XML, Excel, and Delta.

- To connect with a data source without a built-in connector, you can use an ODBC connector or similar facilities.

With these connectors, you can effortlessly bridge the gap between disparate data sources, unlocking valuable insights.
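In ADF, a connection to a data store is described by a linked service. The sketch below builds a linked service definition for the generic ODBC connector as a Python dictionary, assuming a self-hosted integration runtime reaches the source. The service name, connection string, and integration runtime name are hypothetical placeholders.

```python
import json


def odbc_linked_service(name, connection_string, integration_runtime):
    """Build a linked service definition for the generic ODBC connector."""
    return {
        "name": name,
        "properties": {
            "type": "Odbc",
            "typeProperties": {
                "connectionString": connection_string,
                # Assumption: the source needs no credentials.
                "authenticationType": "Anonymous",
            },
            # The integration runtime that can reach the data source.
            "connectVia": {
                "referenceName": integration_runtime,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


# Hypothetical legacy inventory system reachable only through an ODBC driver.
linked_service = odbc_linked_service(
    name="LegacyInventoryOdbc",
    connection_string=(
        "Driver={Hypothetical ODBC Driver};"
        "Server=inventory.example.local;Database=stock;"
    ),
    integration_runtime="SelfHostedIR",
)
print(json.dumps(linked_service, indent=2))
```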

3. Run On-Premises SSIS Packages

If you want to move your SSIS packages to the cloud because of the limitations of SSIS's desktop-based tooling and its heavy memory consumption on servers, ADF lets you do so smoothly. Here are some benefits of moving from on-premises to the cloud with ADF:

- Unlike license-based SSIS, ADF offers a pay-as-you-go model that can lower your TCO by up to 88%.

- An easy-to-migrate solution with fully compatible features to support SSIS packages.

- ADF comes with a deployment wizard tool and abundant how-to guides and other support materials.

- ADF helps in data cataloging with data lineage, tracking, and tagging data from disparate sources, which is vital for data democratization.

- Analytics and machine learning initiatives materialize easily with hybrid data that is cleansed, consumable, and insight-ready.

Image Source: Microsoft Azure

4. DataOps

Continuous integration (CI) and continuous delivery (CD) are the practices of testing every change and delivering it as early as possible. Shrinking the time lag between development (or change), testing, and deployment in a data-based project is called DataOps, similar to DevOps.

ADF supports CI/CD by integrating with Azure DevOps or GitHub. You can automate deployments from various branches into Dev, Test, and Prod environments. ADF uses Azure Resource Manager (ARM) templates to define and store the configuration of your project's ADF entities, such as pipelines, triggers, and datasets.

You can utilize any of the below-mentioned methods to promote your data factory from one environment to another:

- Automatically deploy the data factory to a different environment using Azure pipelines.

- Manually upload an ARM template through Azure Resource Manager.
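Promoting a factory between environments usually means deploying the same ARM template with different parameter values. The sketch below generates one ARM parameter file per environment. The factory names are hypothetical, and it assumes the template exposes a `factoryName` parameter. A real template will typically expose more parameters, such as connection strings.

```python
import json

# Schema identifier used by ARM deployment parameter files.
PARAMETERS_SCHEMA = (
    "https://schema.management.azure.com/schemas/2019-04-01/"
    "deploymentParameters.json#"
)


def build_parameter_file(values):
    """Wrap plain values in the structure ARM expects for parameter files."""
    return {
        "$schema": PARAMETERS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {key: {"value": value} for key, value in values.items()},
    }


# Hypothetical per-environment overrides.
ENVIRONMENT_VALUES = {
    "dev": {"factoryName": "contoso-adf-dev"},
    "test": {"factoryName": "contoso-adf-test"},
    "prod": {"factoryName": "contoso-adf-prod"},
}

parameter_files = {
    env: build_parameter_file(values) for env, values in ENVIRONMENT_VALUES.items()
}

for env, content in parameter_files.items():
    print(f"--- parameters.{env}.json ---")
    print(json.dumps(content, indent=2))
```

A release pipeline can then pick the file that matches the target environment, so the template itself stays identical across Dev, Test, and Prod.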

5. Advanced Data Transformation at Scale

Azure Data Factory provides a variety of data transformation capabilities, including data cleansing, data enrichment, and data aggregation. You can use Data Factory to transform data in a variety of ways, including using built-in transformation tools, custom code, and machine learning.

In clear terms, you can go beyond simple data movement and perform complex transformations on your data. ADF supports running SQL scripts and stored procedures, allowing you to implement sophisticated data transformations and manipulations. This capability ensures that you can easily shape and mold your data to meet specific business requirements, unlocking the full potential of your data assets.
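As an illustration of running a stored procedure from a pipeline, the following sketch builds a Stored Procedure activity definition as a Python dictionary. The activity, linked service, procedure, and parameter names are all hypothetical. Only the general shape is meant to mirror ADF's pipeline JSON.

```python
import json


def stored_procedure_activity(name, linked_service, procedure, parameters):
    """Build a Stored Procedure activity.

    `parameters` maps a parameter name to a (value, type_name) tuple.
    """
    return {
        "name": name,
        "type": "SqlServerStoredProcedure",
        "linkedServiceName": {
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "storedProcedureName": procedure,
            "storedProcedureParameters": {
                key: {"value": value, "type": type_name}
                for key, (value, type_name) in parameters.items()
            },
        },
    }


# Hypothetical procedure that aggregates one day of sales.
sp_activity = stored_procedure_activity(
    name="AggregateDailySales",
    linked_service="AzureSqlWarehouse",
    procedure="dbo.usp_aggregate_daily_sales",
    parameters={"RunDate": ("2024-01-01", "String"), "MinAmount": (100, "Int32")},
)
print(json.dumps(sp_activity, indent=2))
```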

Mapping data flows, a feature of ADF, are visually designed data transformations that help citizen data professionals:

- To build transformation logic graphically without writing code,

Image Source: Microsoft Azure

- To perform your ETL and ELT tasks visually,

- To save time by configuring sources, destinations, and ports with a few clicks instead of writing code,

- To perform various data wrangling operations such as data cleansing, aggregation, transformation, and conversion visually on a canvas.

- The Azure-managed Apache Spark service behind ADF takes care of code generation.

- You don't need to understand Spark programming or clusters.

- You can test the logic in ADF’s debug mode.

Citizen data professionals can prepare and wrangle data using the Power Query feature in ADF. This comes with the following benefits:

- UI similar to Microsoft Excel for the data prep process.

- The process is visual and agile and needs no coding expertise.

- Analyze data to find anomalies and outliers and validate your data.

- Availability of various data prep functions like combining tables, adding and updating columns, row management, etc.

6. Run Your Code on Any Compute

ADF supports various external computing environments to process your data. Some of the compute engines you can connect your ADF project with are:

- Azure Databricks

- Azure HDInsight

- Azure Synapse Analytics

- Azure Machine Learning

- Azure Functions

- Azure Batch
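To show how an external compute engine can be plugged into a pipeline, here is a rough sketch of an Azure Databricks notebook activity that runs only after an upstream activity succeeds. The activity names, linked service name, notebook path, and parameters are hypothetical.

```python
import json


def notebook_activity(name, linked_service, notebook_path, base_parameters, depends_on=()):
    """Build a Databricks Notebook activity that waits for upstream activities."""
    return {
        "name": name,
        "type": "DatabricksNotebook",
        # Run only when every listed upstream activity has succeeded.
        "dependsOn": [
            {"activity": upstream, "dependencyConditions": ["Succeeded"]}
            for upstream in depends_on
        ],
        "linkedServiceName": {
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "notebookPath": notebook_path,
            "baseParameters": base_parameters,
        },
    }


# Hypothetical notebook that scores data once a copy step named "CopyOrders" finishes.
score_activity = notebook_activity(
    name="ScoreOrders",
    linked_service="DatabricksWorkspace",
    notebook_path="/Shared/score_orders",
    base_parameters={"run_date": "2024-01-01"},
    depends_on=["CopyOrders"],
)
print(json.dumps(score_activity, indent=2))
```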

7. Intuitive Visual Interface

Azure Data Factory provides a user-friendly visual interface that simplifies the development and monitoring of data pipelines. The visual interface allows you to create, configure, and manage your data workflows using a drag-and-drop approach. It provides an intuitive way to define the linkages and flow between various data sources, transformations, and destinations. This visual representation enhances collaboration and makes it easier to understand and maintain complex data integration workflows.

8. Robust Monitoring and Management

Efficient monitoring and management are crucial for ensuring the smooth functioning of data integration processes. Azure Data Factory offers robust monitoring capabilities, allowing you to gain insights into the performance and health of your data pipelines. You can track data movement, monitor execution status, and diagnose and troubleshoot issues through comprehensive logs and metrics. This visibility empowers you to optimize and fine-tune your data workflows, ensuring efficient data integration operations.
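As a small illustration of what monitoring data can tell you, the sketch below summarizes a list of pipeline run records into counts by status and a failure rate. The records are made-up sample data, and the field names (`pipelineName`, `status`, `durationInMs`) are assumed to match what you export from your own monitoring setup.

```python
from collections import Counter


def summarize_runs(runs):
    """Summarize pipeline runs: totals, counts by status, and failing pipelines."""
    counts = Counter(run["status"] for run in runs)
    total = sum(counts.values())
    failed = counts.get("Failed", 0)
    return {
        "total": total,
        "by_status": dict(counts),
        "failure_rate": failed / total if total else 0.0,
        "failed_pipelines": sorted(
            {run["pipelineName"] for run in runs if run["status"] == "Failed"}
        ),
    }


# Made-up sample records, for illustration only.
runs = [
    {"pipelineName": "IngestOrders", "status": "Succeeded", "durationInMs": 42000},
    {"pipelineName": "IngestOrders", "status": "Failed", "durationInMs": 9000},
    {"pipelineName": "LoadCustomers", "status": "Succeeded", "durationInMs": 15000},
    {"pipelineName": "LoadCustomers", "status": "Succeeded", "durationInMs": 16000},
]

summary = summarize_runs(runs)
print(summary)
```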

Monitoring

Image Source: Logesys Solutions

Management

Image Source: Logesys Solutions

9. Data Orchestration & Customizable Alerts

Azure Data Factory provides a variety of data orchestration capabilities, including scheduling, monitoring, and alerting. To stay proactive and responsive, you can schedule your data pipelines to run on a regular basis, monitor their status, and receive alerts when something goes wrong. Azure Data Factory enables you to set up alerts that trigger notifications via email. This ensures that you are promptly notified of any issues or anomalies, so you can take immediate action and minimize potential disruptions. By leveraging alerts, you can maintain data integrity and ensure smooth data flow within your organization.
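The scheduling part can be sketched as a trigger definition. The example below builds a daily schedule trigger as a Python dictionary. The trigger name, pipeline name, and start time are hypothetical, and the structure is a simplified view of ADF's schedule trigger JSON.

```python
import json
from datetime import datetime, timezone


def daily_schedule_trigger(name, pipeline_name, start):
    """Build a schedule trigger that runs one pipeline once a day."""
    start_utc = start.astimezone(timezone.utc)
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_utc.strftime("%Y-%m-%dT%H:%M:%SZ"),
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    },
                    "parameters": {},
                }
            ],
        },
    }


# Hypothetical nightly load starting at 02:00 UTC.
trigger = daily_schedule_trigger(
    name="DailyAt2am",
    pipeline_name="NightlySalesLoad",
    start=datetime(2024, 1, 1, 2, 0, tzinfo=timezone.utc),
)
print(json.dumps(trigger, indent=2))
```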

Alerts can be set up in a few ways. The two most common are Logic Apps and inbuilt alerts. Inbuilt alerts are found in the Monitor tab in ADF.

Logic Apps

Image Source: Logesys Solutions

Inbuilt alerts

Image Source: Logesys Solutions

10. Security & Compliance

Azure Data Factory provides a variety of data governance & data security capabilities, including data lineage, data quality, data encryption, data access control, and data auditing. You can use Data Factory to track the lineage of your data, ensure the quality & confidentiality of your data, comply with data regulations, and protect your data from unauthorized access.

- ADF is available in more than 25 regions globally, complying with the local regulatory norms of all areas.

- Data Factory is certified against various ISO standards, HIPAA BAA, HITRUST, and CSA STAR, making it a highly secure tool.

- ADF protects the credentials of every connected database and data store by encrypting them with Microsoft-issued certificates, which are renewed and rotated every 2 years.

- All data in transit between ADF and the data stores is exchanged over HTTPS or TLS-secured channels, whichever the data store supports.

- If a datastore supports encryption of data at rest, ADF recommends enabling that feature.

- Azure Key Vault, a core component of any Azure solution, holds the credential values used by linked services. This extra layer of security is recommended. If used, ADF should retrieve values from this vault using its own Managed Service Identity (MSI).

- ADF inherits all other security guidelines and regulatory policies that Azure follows to function as a secured cloud entity.
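The Key Vault recommendation above can be illustrated with a linked service that references a secret instead of embedding a password. This is a minimal sketch. The linked service names, secret name, and connection string are hypothetical, and it assumes an Azure SQL Database target.

```python
import json


def key_vault_secret(vault_linked_service, secret_name):
    """Reference a secret stored in Azure Key Vault instead of a literal value."""
    return {
        "type": "AzureKeyVaultSecret",
        "store": {
            "referenceName": vault_linked_service,
            "type": "LinkedServiceReference",
        },
        "secretName": secret_name,
    }


def azure_sql_linked_service(name, connection_string, password_reference):
    """Build an Azure SQL Database linked service whose password lives in Key Vault."""
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {
                # The connection string carries no password.
                "connectionString": connection_string,
                "password": password_reference,
            },
        },
    }


# Hypothetical names throughout; the secret value never appears in the definition.
sql_linked_service = azure_sql_linked_service(
    name="SalesDatabase",
    connection_string="Server=tcp:sales.example.net,1433;Database=sales;User ID=etl_user;",
    password_reference=key_vault_secret("CompanyKeyVault", "sales-db-password"),
)
print(json.dumps(sql_linked_service, indent=2))
```

Because the definition holds only a reference, it can be stored in source control without exposing the credential itself.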

All these cloud-based features of Microsoft Azure come by default, making ADF one of the best-in-class and most secure cloud-based ETL tools.

How Can Logesys Help with ADF Implementation?

Logesys teams have many years of rich experience in Microsoft cloud solutions and products. We have been working with our clients to move their data to the cloud with smooth and cost-effective projects.

If you are planning to move your SSIS packages to a cloud-based solution or your on-premise data to Azure, we would be happy to help. Initiate a discussion with us here, and allow us to help you start your ADF and cloud journey.